As the landscape of artificial intelligence evolves, ensuring the safety and alignment of superintelligent large language models (LLMs) is paramount. This workshop will delve into the theoretical foundations of LLM safety. Topics may include the Bayesian view of LLM safety versus the reinforcement learning (RL) view, along with other theories.

The workshop is forward-looking, focusing on how to ensure that a superintelligent LLM or AI remains safe and aligned with humans. It is a joint effort of the Simons Institute and IVADO.

Key Topics:

- Bayesian Approaches to LLM Safety

- Reinforcement Learning Perspectives on Safety

- Theoretical Frameworks for Ensuring AI Alignment

- Case Studies and Practical Implications

- Future Directions in LLM Safety Research

This workshop is part of the programming for the thematic semester on Large Language Models and Transformers, organized in collaboration with the Simons Institute for the Theory of Computing.

All travel grants to attend the event in California have been awarded.

The workshop sessions will also be streamed live online (registration required).

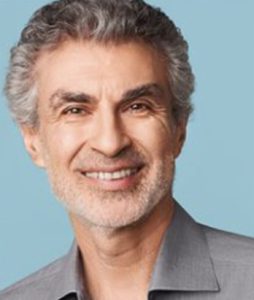

AGENDA

Monday, Apr. 14th, 2025

9 – 9:15 a.m.: Coffee and Check-In

9:15 – 9:30 a.m.: Welcome Address

9:30 – 10:30 a.m.: Simulating Counterfactual Training

Roger Grosse (University of Toronto)

10:30 – 11 a.m.: Break

11 a.m. – 12 p.m.: AI Safety via Inference-Time Compute

Boaz Barak (Harvard University and OpenAI)

12 – 2 p.m.: Lunch (on your own)

2 – 3 p.m.: Controlling Untrusted AIs with Monitors

Ethan Perez (Anthropic)

3 – 3:30 p.m.: Break

3:30 – 4:30 p.m.: Scalable AI Safety via Efficient Debate Games

Georgios Piliouras (Singapore University of Technology and Design)

4:30 – 6 p.m.: Reception

Tuesday, Apr. 15th, 2025

9:30 – 10 a.m.: Coffee and Check-In

10 – 11 a.m.: Full-Stack Alignment

Ryan Lowe (Meaning Alignment Institute)

11 – 11:30 a.m.: Break

11:30 a.m. – 12:30 p.m.: Can We Get Asymptotic Safety Guarantees Based On Scalable Oversight?

Geoffrey Irving (UK AI Security Institute)

12:30 – 2:30 p.m.: Lunch (on your own)

2:30 – 3:30 p.m.: Amortised Inference Meets LLMs: Algorithms and Implications for Faithful Knowledge Extraction

Nikolay Malkin (University of Edinburgh)

3:30 – 4 p.m.: Break

4 – 5 p.m.: Special Lecture – Richard M. Karp Distinguished Lecture

Yoshua Bengio (IVADO – Université de Montréal – Mila)

5 – 6 p.m.: Panel Discussion

Yoshua Bengio (IVADO – Université de Montréal – Mila), Dawn Song (UC Berkeley), Roger Grosse, Geoffrey Irving, Siva Reddy (IVADO – McGill University – Mila)

Wednesday, Apr. 16th, 2025

8:30 – 9 a.m.: Coffee and Check-In

9 – 10 a.m.: Robustness of Jailbreaking across Aligned Models, Reasoning Models and Agents

Siva Reddy (IVADO – McGill University – Mila)

10 – 10:15 a.m.: Break

10:15 – 11:15 a.m.: Adversarial Training for LLMs’ Safety Robustness

Gauthier Gidel (IVADO – Université de Montréal – Mila)

11:15 – 11:30 a.m.: Break

11:30 a.m. – 12:30 p.m.: Talk By

Zico Kolter (Carnegie Mellon University)

12:30 – 2 p.m.: Lunch (on your own)

2 – 3 p.m.: Causal Representation Learning: A Natural Fit for Mechanistic Interpretability

Dhanya Sridhar (IVADO – Université de Montréal – Mila)

3 – 3:15 p.m.: Break

3:15 – 4:15 p.m.: Out of Distribution, Out of Control? Understanding Safety Challenges in AI

Aditi Raghunathan (Carnegie Mellon University)

Thursday, Apr. 17th, 2025

9 – 9:30 a.m.: Coffee and Check-In

9:30 – 10:30 a.m.: LLM Negotiations and Social Dilemmas

Aaron Courville (IVADO – Université de Montréal – Mila)

10:30 – 11 a.m.: Break

11 a.m. – 12 p.m.: Scalably Understanding AI With AI

Jacob Steinhardt (UC Berkeley)

12 – 1:45 p.m.: Lunch (on your own)

1:45 – 2:45 p.m.: Future Directions in AI Safety Research

Dawn Song (UC Berkeley)

2:45 – 3 p.m.: Break

3 – 4 p.m.: What Can Theory of Cryptography Tell Us About AI Safety

Shafi Goldwasser (UC Berkeley)

4 – 5 p.m.: Assessing the Risk of Advanced Reinforcement Learning Agents Causing Human Extinction

Michael Cohen (UC Berkeley)

Friday, Apr. 18th, 2025

8:30 – 9 a.m.: Coffee and Check-In

9 – 10 a.m.: Safeguarded AI Workflows

David Dalrymple (Advanced Research + Invention Agency)

10 – 10:15 a.m.: Break

10:15 – 11:15 a.m.: AI Safety: LLMs, Facts, Lies, and Agents in the Real World

Chris Pal (IVADO + Polytechnique + Mila + UdeM DIRO + CIFAR + ServiceNow)

11:15 – 11:30 a.m.: Break

11:30 a.m. – 12:30 p.m.: Measurements for Capabilities and Hazards

Dan Hendrycks (Center for AI Safety)

12:30 – 2 p.m.: Lunch (on your own)

2 – 3 p.m.: Theoretical and Empirical Aspects of Singular Learning Theory for AI Alignment

Daniel Murfet (Timaeus)

3 – 3:30 p.m.: Break

3:30 – 4:30 p.m.: Probabilistic Safety Guarantees Using Model Internals

Jacob Hilton (Alignment Research Center)

4:30 – 4:45 p.m.: Closing Remarks